A team of scientists in Australia say that they have found a way to make cold brew coffee in less than three minutes using an ultrasonic reactor. This is a potentially massive deal because cold brew normally takes between 12 and 24 hours to brew, a problem for me, personally, when I do not carefully manage my cold brew stock. The lead scientist on the research team tells me he has also created a “cold espresso,” which is his personal favorite and sounds very intriguing.

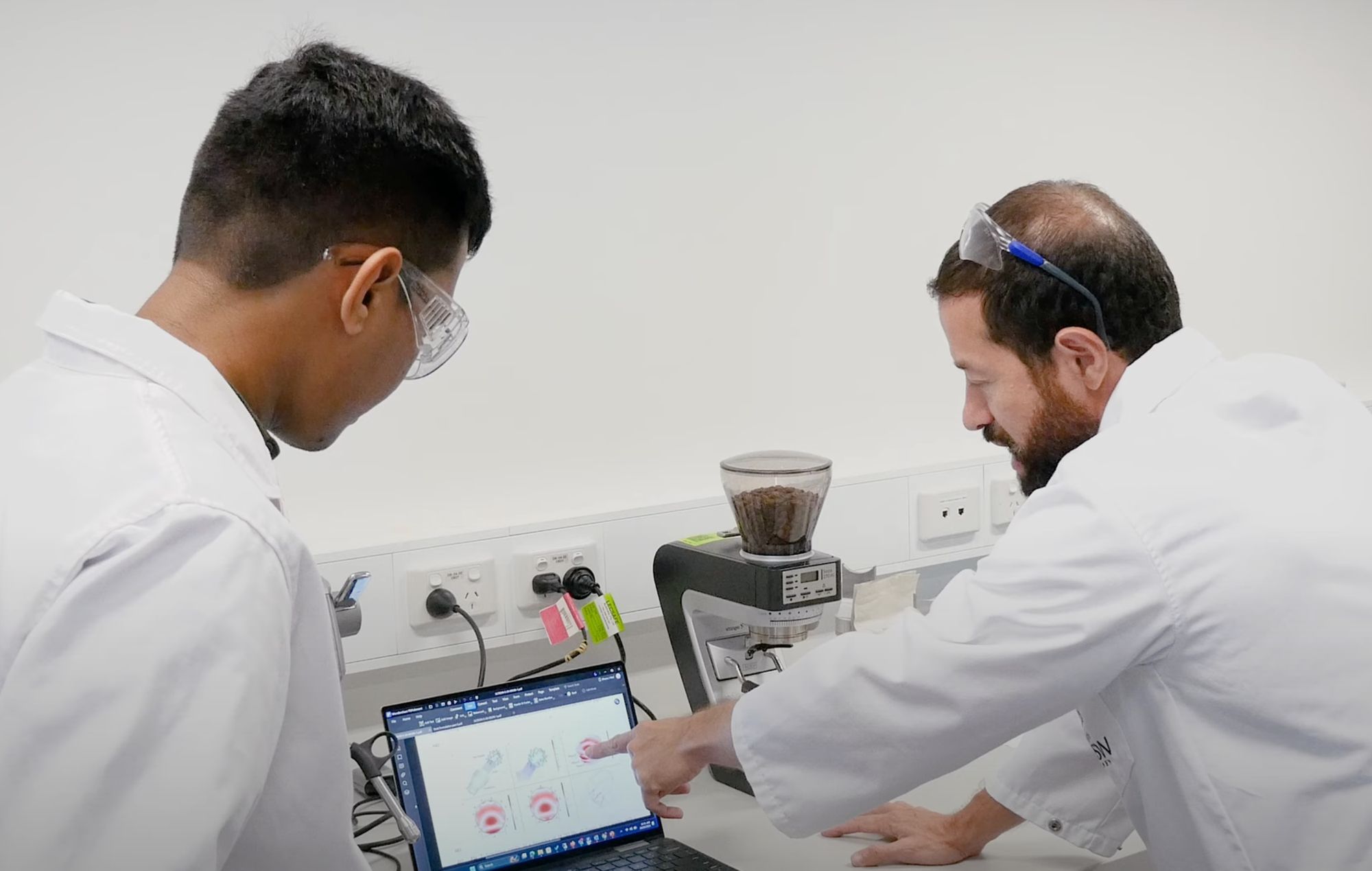

The researchers at the University of New South Wales Sydney claim that their ultrasonic extraction held up to a “sensory analysis” and blind taste tests by trained experts: “A sensory analysis was conducted to evaluate appearance, aroma, texture, flavor, and aftertaste, which demonstrated that coffee brewed for 1 and 3 min in the sonoreactor exhibited almost undistinguishable properties compared to a standard 24 hour [cold] brewing without ultrasound,” they write in a paper about the method in the journal Ultrasonics Sonochemistry.

For the uninitiated, cold brewed coffee is made by soaking coffee grounds in cold or room temperature water in large batches to create a concentrate that you can keep in the fridge for a week or two. Because the water is not hot, the extraction from ground coffee beans takes much longer than it does with traditional hot brewing. The resulting cold brew is less acidic, less bitter, and sweeter. This long brew time isn’t a problem if you plan ahead, but, as mentioned, if you do not plan ahead, you cannot really speed up the cold brew time while continuing to have cold brew. As lead author Francisco Trujillo notes in the paper, the resulting large batches of cold brew concentrate also take up a lot of counter and fridge space, meaning that not every coffee shop or restaurant has it on hand. This is a phenomenon I am very familiar with, as many establishments currently on my shitlist claim that they have “cold brew” that is actually hot coffee poured over ice.

Trujillo’s new method uses room temperature water in a normal espresso machine that has been modified in two ways: the boiler is turned off (or down), and an added device hits the beans with ultrasonic waves at a specific frequency that makes them shake. In layman’s terms, they are blasting the beans with ultrasound, which causes the beans to vibrate and their cell walls to burst, allowing the rapid extraction of coffee without heat. Trujillo explains in the paper that extraction happens because of “acoustic cavitation. When acoustic bubbles, also called inertial bubbles, collapse near solid materials, such as coffee grounds, they generate micro jets with the force to fracture the cell walls of plant tissues, intensifying the extraction of the intracellular content.”

Trujillo told me that he learned this was possible in a study he published in 2020, and set out to “superimpose ultrasound in the coffee basket of an existing espresso machine. We purchased a few Breville espresso machines, opened them up, and started the journey. Mathematical modeling of the sound transmission system and of acoustic cavitation was key for the success of the design.” Some of that mathematical modeling is available in the paper.

He said that the team experimented with a variety of frequencies, and that frequencies between 20 and 100 kHz are all good at extracting coffee. “The lower the frequency, the larger the transducer and the horn,” he said. “If the frequency is in the low range, there are harmonics that can be heard. We worked at 28 kHz and at 38-40 kHz, and we chose 38-40 kHz as it was more compact and with a quieter performance.”
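To get a rough feel for why a lower frequency means bulkier hardware, here is a back-of-the-envelope sketch. It rests on assumptions that are mine, not the paper's: that the horn is sized as a half-wavelength resonator (a common rule of thumb for ultrasonic horns), and that sound travels through the horn material at a round 5,000 meters per second. The function name and the constant are made up for illustration, and the real design involved far more detailed modeling.

```python
# Rough illustration only: half-wavelength resonator length L = c / (2 * f).
# ASSUMPTION: 5000 m/s is a round, hypothetical speed of sound for a metal
# horn. It is not a value taken from the paper.
ASSUMED_SOUND_SPEED_M_S = 5000.0


def half_wavelength_mm(frequency_khz, sound_speed_m_s=ASSUMED_SOUND_SPEED_M_S):
    """Return the half-wavelength in millimeters for a frequency given in kHz."""
    if frequency_khz <= 0:
        raise ValueError("frequency must be positive")
    frequency_hz = frequency_khz * 1000.0
    # Wavelength is c / f; a half-wavelength part is half of that.
    return sound_speed_m_s / (2.0 * frequency_hz) * 1000.0


# Frequencies mentioned in the article: the 20-100 kHz range, plus 28 and 40 kHz.
for f_khz in (20, 28, 40, 100):
    print(f"{f_khz:>3} kHz -> about {half_wavelength_mm(f_khz):.1f} mm")
```

Under those assumptions, a 28 kHz part comes out around 89 mm long and a 40 kHz part around 63 mm, which at least points in the same direction as Trujillo's comment that the higher frequency was "more compact."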

Essentially, his team was able to modify an existing Breville espresso machine to do this. They experimented with different brew times and water temperatures (104 degrees F, well below boiling, was the hottest they tried) and were able to create a variety of cold extractions. One of them is not mentioned in the paper, but Trujillo told me about it: he calls it “cold espresso,” and said such brews are his “favorite ones” and “offer a unique sensory experience like nothing in the market. It is bold and rich in flavor and aroma, less bitter, and with a great acidity. It is more viscous and with a very nice finishing (according to coffee experts that have tried our brews). That will be a unique and novel coffee beverage to be appreciated by coffee lovers, hopefully worldwide.”

The various ultrasonic cold brews the team produced were tested at the Queensland Alliance for Agriculture and Food Innovation by a group of “11 trained sensory panelists” who “had previously been screened of their sensory acuity.” They scored the ultrasonic extractions very similarly to real cold brew, though of course whether the ultrasonic coffee is actually “almost undistinguishable” from real cold brew will depend on each person’s taste.

I have long been interested in the science of coffee. When I was a freelancer, I went to Manizales, Colombia, to a national laboratory called “Cenicafe.” A scientist there called it the “NASA of Colombia,” referring to how seriously the institute takes the scientific pursuit of growing, roasting, and brewing ever-improving coffee. Cenicafe was easily one of the coolest places I’ve been in my life; they were genetically sequencing different species of coffee, hybridizing arabica and robusta coffee in attempts to create strains that taste good but are also resistant to both climate change and “coffee rust,” a fungus that regularly blights huge amounts of the coffee harvest in many countries, and were experimenting with new ways to brew coffee. I include this to say that, while inventing a new type of coffee brewing may seem frivolous, there is actually a huge amount of time, effort, and funding going into ensuring that there is ongoing innovation in coffee growing and brewing tech, which is particularly important considering that coffee plants are especially susceptible to climate change.

Trujillo said that he plans to license the technology to coffee maker companies so that it can be used in both commercial coffee shops and in people’s homes.

“I love cold brew, and coffee in general,” he said. “I am Colombian and my grandfather had a business of buying coffee beans from the local producers, he then dried the beans under the sun on ‘costales’ (a traditional Colombian strong fabric) that he placed on the street. That was in Ortega, a little town in Colombia. There were other gentlemen like my grandfather who had the same business. So, during the season period, the streets of Ortega were filled with costales with coffee beans drying under the sun!”